Since a Hyperledger Fabric (HLF) network consists of many different Docker images communicating with each other, it makes sense to use tools like Kubernetes for the deployment and management of this type of network. This post is about the first hurdles when trying to combine HLF with Kubernetes from scratch.

Getting started

Where do we start? Looking at HLF, we see that it uses Docker Compose for quick and easy setup on your local machine. Docker Compose is a tool for defining and running multi-container Docker applications. That is great for local development, and for deployment if you use Docker Swarm to manage your containers, but not so great if we want to deploy to Kubernetes.

For Kubernetes, you’ll need a different kind of configuration file to define your cluster. Luckily there is a tool called kompose, which converts docker-compose configuration files to Kubernetes configuration files. Running it on the docker-compose.yml file from the HLF samples repository looks like this:

kompose convert -f docker-compose.yml -o kubernetes.yaml

For this setup, we will use the fabcar demo from the HLF samples repository. Running the command above on the docker-compose file of this example generates a single kubernetes.yaml file, together with some warnings in the terminal:

WARN Unsupported depends_on key - ignoring

WARN Volume mount on the host "/var/run/" isn't supported - ignoring path on the host

WARN Volume mount on the host "./../chaincode" isn't supported - ignoring path on the host

WARN Volume mount on the host "./crypto-config" isn't supported - ignoring path on the host

WARN Volume mount on the host "./" isn't supported - ignoring path on the host

WARN Volume mount on the host "./crypto-config/ordererOrganizations/example.com/orderers/orderer.example.com/msp" isn't supported - ignoring path on the host

WARN Volume mount on the host "/var/run/" isn't supported - ignoring path on the host

WARN Volume mount on the host "./crypto-config/peerOrganizations/org1.example.com/peers/peer0.org1.example.com/msp" isn't supported - ignoring path on the host

WARN Volume mount on the host "./crypto-config/peerOrganizations/org1.example.com/users" isn't supported - ignoring path on the host

Looking at the warnings, there are still some unresolved issues. If we inspect the output in the kubernetes.yaml file, we see that kompose created a lot of PersistentVolumeClaims, but no PersistentVolumes to bind these claims to.
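A quick way to confirm this is to count the resource kinds in the generated file. The sketch below assumes that every resource declares its kind on its own `kind: <Name>` line, which is how the single-file output looked in our case; the helper name `count_kind` is ours, not part of kompose or kubectl.

```shell
# Count how often a resource kind appears in a Kubernetes manifest.
# Assumption: each resource has a line of the form "kind: <Name>",
# optionally indented or prefixed with a YAML list dash.
count_kind() {
  manifest="$1"
  kind="$2"
  # grep -c prints the number of matching lines; "|| true" keeps a
  # count of zero from being reported as a failure.
  grep -c -E "^[[:space:]]*(-[[:space:]]*)?kind:[[:space:]]*${kind}[[:space:]]*\$" "$manifest" || true
}

# Example usage against the generated file:
#   count_kind kubernetes.yaml PersistentVolumeClaim
#   count_kind kubernetes.yaml PersistentVolume
```

The pattern is anchored at the end of the line, so counting PersistentVolume does not accidentally match PersistentVolumeClaim.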

Now, we could add new PersistentVolumes to the Kubernetes config, but instead we are going to try another Kubernetes feature: secrets and configMaps. Why? Because the files that need to be mounted for HLF are (1) certificates and keys, and (2) the genesis block and channel configuration. These files fit the definition of (1) secrets and (2) configMaps respectively (the latter is debatable), so it makes sense to use these Kubernetes features.

For the Kubernetes cluster itself, we use a GKE cluster, since these are easy to set up and resemble a production environment (at least compared to running a Kubernetes cluster locally).

The HLF Orderer service

Ok, we know what we want to try. To keep our focus, we’ll only try to get the orderer image from HLF running on a kubernetes cluster.

For both the genesis.block file and the mychannel.tx file, we create a single configMap, like so:

kubectl create configmap configtx --from-file=./network/genesis.block --from-file=./network/mychannel.tx

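It’s important to add both configuration files to a single configMap, since they will be mounted at the same location in the container, and a mounted volume hides any files already present in that directory. The MSP material of the orderer goes into secrets, one per MSP subdirectory: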

kubectl create secret generic msp-orderer-admincerts \
  --from-file=./network/crypto-config/ordererOrganizations/example.com/orderers/orderer.example.com/msp/admincerts/Admin_example.com-cert.pem

kubectl create secret generic msp-orderer-cacerts \
  --from-file=./network/crypto-config/ordererOrganizations/example.com/orderers/orderer.example.com/msp/cacerts

kubectl create secret generic msp-orderer-keystore \
  --from-file=./network/crypto-config/ordererOrganizations/example.com/orderers/orderer.example.com/msp/keystore

kubectl create secret generic msp-orderer-signcerts \
  --from-file=./network/crypto-config/ordererOrganizations/example.com/orderers/orderer.example.com/msp/signcerts/orderer.example.com-cert.pem

kubectl create secret generic msp-orderer-tlscacerts \
  --from-file=./network/crypto-config/ordererOrganizations/example.com/orderers/orderer.example.com/msp/tlscacerts

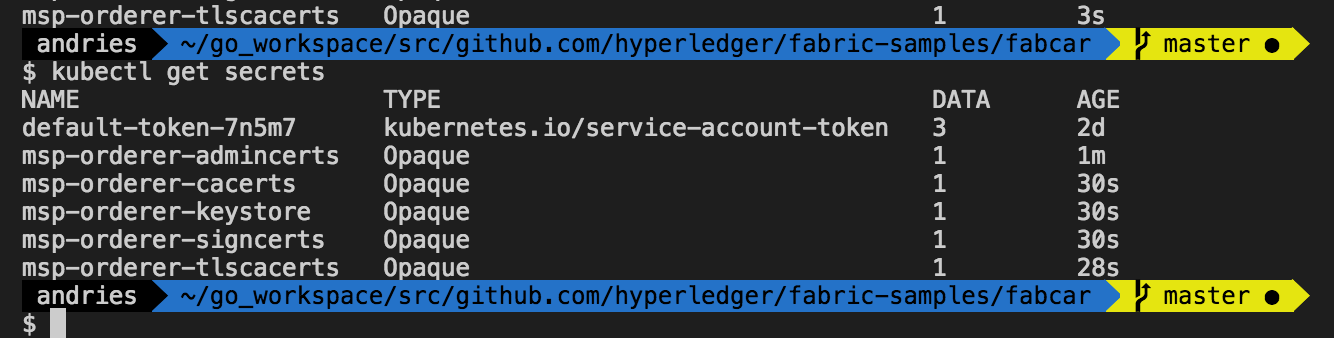

When trying to create these secrets, we already encounter a problem: the @ symbol cannot be used in the key of a Kubernetes secret, and that key defaults to the file name. We therefore have to rename the default Admin@example.com-cert.pem to Admin_example.com-cert.pem, as shown in the commands above. Afterwards, we have our secrets and configMaps on our Kubernetes cluster.
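An alternative to renaming the file on disk is the `key=path` form of `--from-file`, which lets the key differ from the file name. The sketch below derives a valid key from a path and builds the flag; the helper names `secret_key_for` and `from_file_arg` are hypothetical, and we did not use this approach in the setup described here.

```shell
# Derive a secret key from a file path: take the file name and replace
# every character outside [A-Za-z0-9._-] with an underscore.
secret_key_for() {
  printf '%s' "$(basename "$1")" | LC_ALL=C tr -c 'A-Za-z0-9._-' '_'
}

# Build the --from-file flag in its "key=path" form.
from_file_arg() {
  printf '%s%s=%s' '--from-file=' "$(secret_key_for "$1")" "$1"
}

# Example usage (the path is shortened here for readability):
#   kubectl create secret generic msp-orderer-admincerts \
#     "$(from_file_arg ./msp/admincerts/Admin@example.com-cert.pem)"
```

Note that the key also becomes the file name inside the container once the secret is mounted, so the certificate would still show up as Admin_example.com-cert.pem.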

Deploying the orderer

Deploying the orderer consists of getting the following in your cluster:

- secrets (done)

- configMaps (done)

- Kubernetes Service

- Kubernetes Deployment → creates pods

We extracted the Kubernetes Service and Deployment from the general kubernetes.yaml file into a separate orderer.yaml file. We then modified the Deployment to mount the secrets and configMaps at the right directories, following the original docker-compose file. Now we can deploy with:

kubectl create -f ./orderer.yaml
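For reference, the mount-related part of the modified Deployment could look roughly like the fragment printed by the sketch below. The resource names `configtx` and `msp-orderer-admincerts` come from the commands earlier in this post; the container name, the volume names and both mount paths are assumptions and have to be checked against the volume entries in your own docker-compose file.

```shell
# Print an illustrative Deployment fragment that mounts one configMap
# and one secret. Mount paths and container name are assumptions.
orderer_volumes_fragment() {
  cat <<'EOF'
spec:
  template:
    spec:
      containers:
        - name: orderer
          volumeMounts:
            - name: configtx
              mountPath: /etc/hyperledger/configtx   # assumed path
              readOnly: true
            - name: msp-admincerts
              mountPath: /etc/hyperledger/msp/orderer/admincerts   # assumed path
              readOnly: true
      volumes:
        - name: configtx
          configMap:
            name: configtx
        - name: msp-admincerts
          secret:
            secretName: msp-orderer-admincerts
EOF
}

# Example usage: write the fragment to a file for manual merging.
#   orderer_volumes_fragment > orderer-volumes-fragment.yaml
```

The remaining four secrets would follow the same pattern, each with its own volume and mount path.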

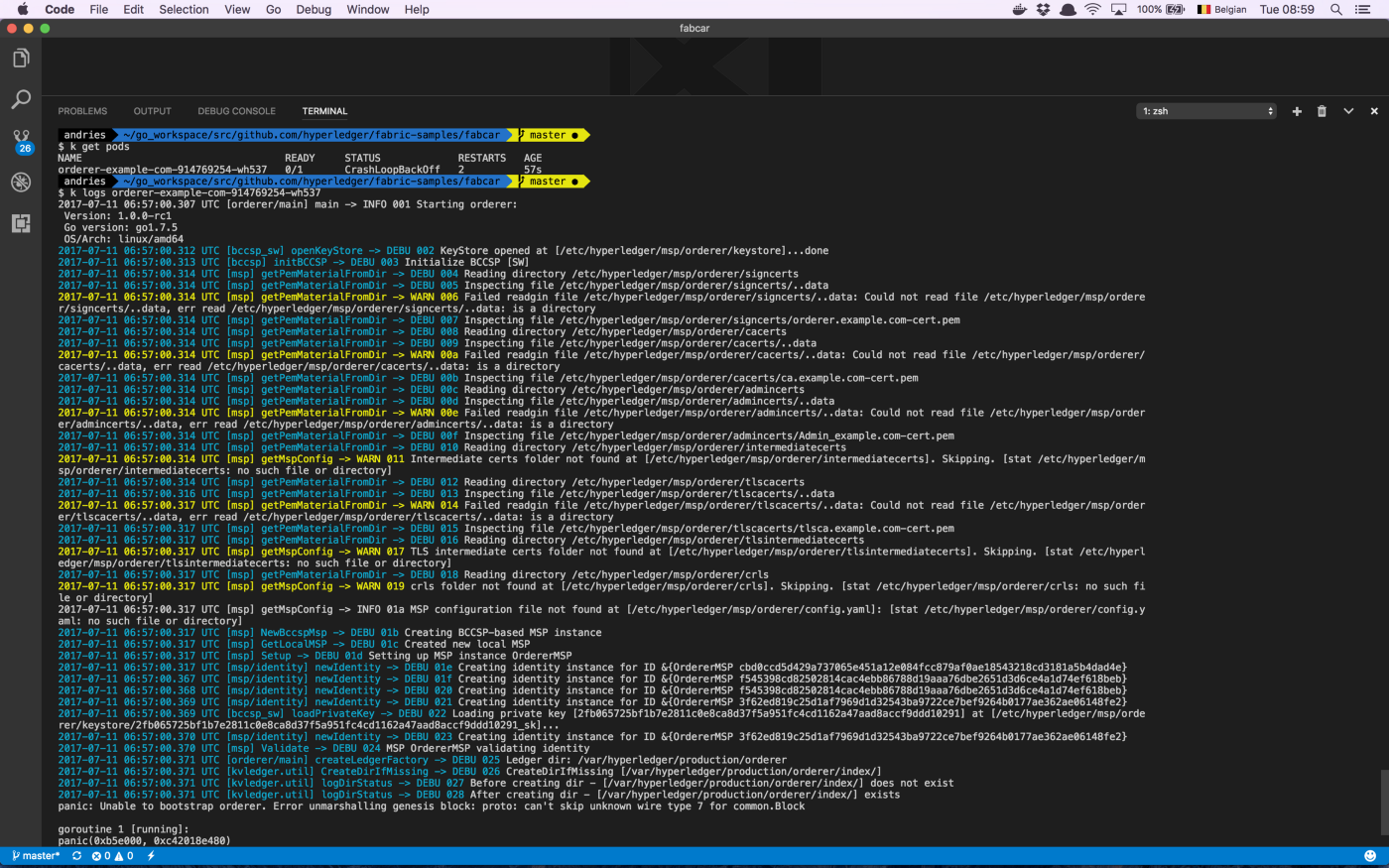

We get some errors indicating that the name ‘orderer.example.com’ is not valid because it contains dots. After changing the name, we can deploy our Kubernetes Service and Deployment. When checking on the running pod, however, it seems to have crashed.

Its logs suggest that the secrets are not mounted properly, or that the orderer cannot read them. Because the pod crashed, connecting to it for inspection is not possible.
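As a side note on the renaming step above: Service names, for example, have to be valid DNS labels (lowercase letters, digits and dashes, starting with a letter). The sketch below converts a docker-compose service name into a candidate name and checks it; both helper names are hypothetical, and replacing dots with dashes is just one possible convention.

```shell
# Turn a docker-compose service name into a candidate Kubernetes name:
# lowercase it and replace anything outside [a-z0-9-] with a dash.
k8s_name() {
  printf '%s' "$1" | tr '[:upper:]' '[:lower:]' | sed 's/[^a-z0-9-]/-/g'
}

# Succeed only if the argument is a DNS label of at most 63 characters
# that starts with a letter and ends with a letter or digit.
is_dns_label() {
  [ "${#1}" -le 63 ] && printf '%s\n' "$1" | grep -Eq '^[a-z]([-a-z0-9]*[a-z0-9])?$'
}

# Example usage:
#   k8s_name orderer.example.com        # prints orderer-example-com
#   is_dns_label orderer.example.com || echo "not a valid label"
```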

To investigate, we mount the same secrets and configMaps in another, simpler pod, like the Kubernetes nginx example, which we can connect to in order to check whether they are mounted properly. When inspecting the directory where we mounted our configMap, we see the following:

root@shell-demo:/usr/share/cconfig# ls -l

total 0

lrwxrwxrwx 1 root root 20 Jul 11 15:37 genesis.block -> ..data/genesis.block

lrwxrwxrwx 1 root root 19 Jul 11 15:37 mychannel.tx -> ..data/mychannel.tx

The configMaps (and secrets) are getting mounted, only not as regular files but as symbolic links to files. This explains a lot: HLF doesn’t handle symbolic links correctly in functions like getPemMaterialFromDir. Instead of resolving the symlink, HLF tries to parse the directory from the symlink path. To get around this issue, two options are available:

- HLF could be updated, so it also can parse symlinks → preferable, but this requires HLF to change

- We abandon the idea of secrets and configMaps (sadly), and use PersistentVolumes instead as a general solution for mounting files into the containers with Kubernetes
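To make the symlink problem more tangible, the sketch below recreates the mounted layout in a scratch directory and lists only the entries that resolve to regular files. It is a shell illustration of the behaviour a directory scanner needs, not HLF code. As far as we know, the hidden `..data` entry is itself a symlink to a directory managed by Kubernetes; the name `..snapshot` used for that directory here is made up.

```shell
# Recreate the layout from the listing above: each visible file is a
# symlink into "..data", which in turn is a symlink to a directory.
make_projected_layout() {
  layout_dir="$1"
  mkdir -p "$layout_dir/..snapshot"   # hypothetical name for the real directory
  printf 'block' > "$layout_dir/..snapshot/genesis.block"
  printf 'tx' > "$layout_dir/..snapshot/mychannel.tx"
  ln -s ..snapshot "$layout_dir/..data"
  ln -s ..data/genesis.block "$layout_dir/genesis.block"
  ln -s ..data/mychannel.tx "$layout_dir/mychannel.tx"
}

# List the entries of a directory that resolve to regular files.
# "[ -f ]" follows symlinks, so links to files are kept while "..data"
# (a link to a directory) and real directories are skipped.
list_files_following_symlinks() {
  for entry in "$1"/* "$1"/.[!.]* "$1"/..?*; do
    [ -f "$entry" ] && basename "$entry"
  done | sort
}

# Example usage:
#   scratch="$(mktemp -d)"
#   make_projected_layout "$scratch"
#   list_files_following_symlinks "$scratch"
```

A scanner that inspects the link itself rather than its target sees `..data` as a non-directory entry and then fails when it tries to read it as a file, which matches the kind of failure described above.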

That’s all for now. Exploring the second option is for another post!

UPDATE 14/12/2017: On December 13, 2017, a change to HLF was submitted that updates getPemMaterialFromDir so that it can handle symlinks, meaning the first option would become available again!

Last modified on 2017-07-12